Introduction

Purpose

Outline best practices for secure, private, applicable, and ethical use of artificial intelligence (AI) technologies, so that state entities can feel comfortable exploring innovative ways to enhance the citizen experience and productivity.

This guideline highlights common risks, challenges, and legal considerations when using this technology.

Scope

This guideline applies to all North Dakota executive branch state agencies, including the University Systems Office but excluding other higher education institutions (i.e., campuses and agricultural and research centers). State agencies outside the scope of this guideline are encouraged to adopt it or use it to frame their own.

Definitions

Artificial Intelligence (AI) – A field in computer science that focuses on independent decisions based on supervised and unsupervised learning.

Machine Learning (ML) – A subfield of AI that focuses on the development of algorithms and statistical models to make independent decisions, but still needs humans to guide and correct inaccurate information. ML is the most common type of AI.

Large Language Models (LLMs) – A type of AI that has been trained on large amounts of text and datasets to understand existing content and generate original content.

Deep Learning – A subfield of machine learning that uses multi-layered neural networks to learn patterns from large amounts of data. Deep learning models can learn from labeled data (supervised learning) or from data without labels (unsupervised learning).

Examples: self-driving cars, facial recognition, and ChatGPT and similar tools.

Generative AI – A type of AI that uses machine learning to generate new outputs based on training data. Generative AI algorithms can produce brand new content in the form of images, text, audio, code, or synthetic data.

Business Owner – An entity’s senior or executive team member who is responsible for the security and privacy interests of organizational systems and supporting mission and business functions.

Data Owner – The individual or individuals responsible and accountable for data assets.

Data Steward – The individual or individuals with assigned responsibility for the direct operational-level management of data.

Guidelines

North Dakota state government branches, agencies, and entities are responsible for developing and administering policies, standards, and guidelines to protect the confidentiality, integrity, and/or availability of state data. This guideline was developed to supplement the Artificial Intelligence Policy, to prevent the misuse of AI and ML technologies, minimize security and privacy risk, and reduce potential exposure of sensitive organizational or regulatory data.

Additional AI education and resources can be found on the Artificial Intelligence page of the statewide intranet. State-issued credentials are required to access these materials.

Security and Privacy

AI/ML systems are designed to adapt to data input and output, putting privacy at risk when sensitive data is utilized as input. Data entered in public AI/ML services is not secure and not protected from unauthorized access. These public AI/ML services may incorporate input into their learning model, which can expose data as output to other individuals.

- If the data/business owner is evaluating AI for a business use case, submit an Initiative Intake Request via the NDIT Self-Service Portal.

- The State of North Dakota will consult the National Institute of Standards and Technology (NIST) Special Publication (SP) 1270 Towards a Standard for Identifying and Managing Bias in Artificial Intelligence, NIST AI 100-1 Artificial Intelligence Risk Management Framework, and other regulatory frameworks for guidance on AI best practices.

- Use of a state-issued email address to create user IDs for free and public AI resources (e.g., ChatGPT) is not recommended. If it is necessary, it is permitted provided you use a unique password.

- Personally managed accounts created with personal email usernames are recommended for exploratory use of public services while use of state-issued credentials is recommended for enterprise AI solutions.

- Using a state-issued email address as the username for third-party accounts on public services may cause confusion or technical issues later. For example, if the State pursues an enterprise offering on the same platform and connects it to the State single sign-on solution, you might end up with two similar logins for that platform. This is because managed State accounts typically use state-issued email addresses as part of the login by default.

Bias and Accuracy

AI/ML technology services are only as accurate as their datasets. All data have some level of bias. AI applications will reflect any bias present in the data on which they are trained, because AI/ML technologies do not understand your data. As with any use of data, it is important to understand the quality of your data, whether it is a representative sample of the population it describes, and what any deficiencies might mean for the usefulness of your analysis.

Data can also be compromised by individuals with malicious intent and result in intentionally biased data, tailored propaganda, or inaccurate results. Output from AI can also result in unintentional bias.
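As a rough illustration of checking whether a dataset is a representative sample, the sketch below compares each group's share of a dataset with its share of the reference population and flags large gaps. The function name, the group labels, the numbers, and the 5-percentage-point tolerance are hypothetical and chosen only for illustration; they are not part of this guideline.

```python
from collections import Counter


def representation_gaps(sample_groups, population_shares, tolerance=0.05):
    """Return groups whose share of the sample differs from their
    population share by more than `tolerance` (an assumed threshold)."""
    total = len(sample_groups)
    if total == 0:
        raise ValueError("sample_groups must not be empty")
    counts = Counter(sample_groups)
    gaps = {}
    for group, expected_share in population_shares.items():
        observed_share = counts.get(group, 0) / total
        difference = observed_share - expected_share
        if abs(difference) > tolerance:
            # Positive means over-represented, negative means under-represented.
            gaps[group] = round(difference, 3)
    return gaps


# Hypothetical data: 80% of records are urban, but the population is 60% urban.
sample = ["urban"] * 80 + ["rural"] * 20
population = {"urban": 0.6, "rural": 0.4}
gaps = representation_gaps(sample, population)
print(gaps)
```

A non-empty result does not prove that an analysis is biased, but it is a signal that the data should be reviewed before its output is relied on.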

- Accuracy of output should be evaluated prior to use and regularly thereafter.

- Users should always use trusted and reliable sources to confirm results that come from AI/ML. Decisions made using biased analysis might have unintended consequences for the populations we serve.

- Approved vendor or internal AI/ML services require periodic quality assurance checks to ensure AI-driven outcomes are accurate.

- Verify output for accuracy prior to use, and exclude moderate-risk and high-risk data, following the Data Classification Policy.
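The data-exclusion rule above could be applied as a simple check before any text is submitted to a public AI service. This is a minimal sketch under stated assumptions: the `MODERATE` and `HIGH` levels mirror the wording of this guideline, while the `LOW` level, the function names, and the idea that every record carries a classification label are hypothetical.

```python
from enum import Enum


class RiskLevel(Enum):
    LOW = "low"            # assumed label for data that is neither moderate- nor high-risk
    MODERATE = "moderate"
    HIGH = "high"


# Levels this guideline says to exclude from public AI/ML services.
EXCLUDED_LEVELS = {RiskLevel.MODERATE, RiskLevel.HIGH}


def allowed_in_public_ai(level: RiskLevel) -> bool:
    """Return True only when the classification level is not excluded."""
    return level not in EXCLUDED_LEVELS


def filter_for_public_ai(records):
    """Keep only the texts whose classification permits public AI use.

    `records` is an iterable of (text, RiskLevel) pairs (a hypothetical shape).
    """
    return [text for text, level in records if allowed_in_public_ai(level)]


# Hypothetical records with classification labels already assigned.
records = [
    ("Draft agenda for a public meeting", RiskLevel.LOW),
    ("Internal firewall configuration", RiskLevel.HIGH),
]
print(filter_for_public_ai(records))
```

A check like this depends on the data having been classified correctly in the first place; it does not replace review by the data owner.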

Approved AI or ML enhancement products may include browser plugins, mobile applications, websites, etc.

With the popularity of new AI/ML technologies, threat actors have created legitimate-looking browser plugins, mobile applications, and other technologies that are used with malicious intent.

Business Use Cases

- Content Curation: Drafting, refining, editing, reviewing, and creating stylized writing.

- Emails

- Presentations

- Memos

- Marketing

- Text Summarization

- Preliminary Research

- Chatbot: Customer Service and Support

- Programming/Code Generation

- Automation

- Media Creation: Audio, Video, and Images

- Prediction and other analytics applications

Examples of Acceptable Use

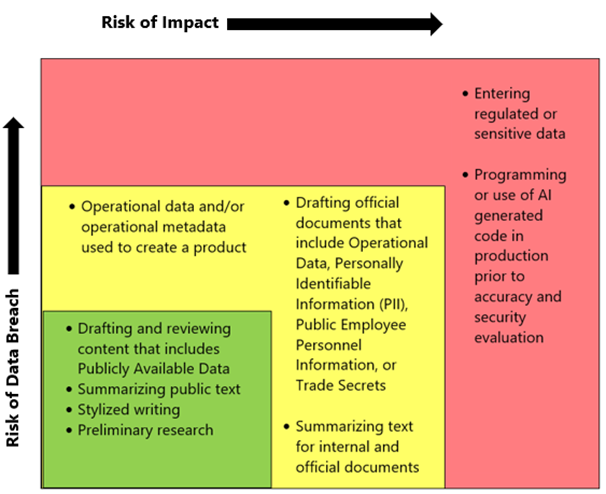

The following are some examples of acceptable use and non-acceptable use of publicly available or privately managed common AI tools. The key is to understand who is managing your user identity, to be aware of the sensitivity of the data you may be exposing, and to follow standard password management practices.

The value of acquiring enterprise versions of such tools is to allow for acceptable use in more sensitive use cases. Enterprise versions of tools provide more safeguards and more control over the flow of data in and out of these models. This allows for using the same tools for more internal scenarios such as system configuration, internal policies, and other information that is not shared outside the organization or certain teams.

How do I know if I’m using an enterprise version of a tool? Here are a few tips for helping you determine this:

- If you need to self-register to use the tool, this usually means the State is not managing the user account. If it were a managed account, you would be able to sign in with your State credentials, the same account used to sign in to your computer, without having to register and create a password.

- Such accounts are managed by the vendor and used to keep your search history and preferences tied to your account for usability purposes. There is no tie to any other accounts, even if you reuse the same email/username.

- A public or privately managed service does not ensure that the data you input (the questions you ask) or the responses you receive are private or secure. It is comparable to signing in to Google and then using its search engine.

The following examples highlight the differences between using some popular tools and using an enterprise solution built on the same technology.

Example Scenario: Creating a policy or process for your organization

Available Tool: Publicly available version of ChatGPT by OpenAI

Considerations: The tool is readily available, but the most care is required when sharing data

Acceptable Use | Not Acceptable Use

Internal or sensitive information refers to data classified as moderate-risk or high-risk in the “Data Classification Policy.”

*The AI Risk Matrix and the use of data following the Data Classification Policy are applicable to most AI. Use of data within approved enterprise AI solutions depends on the results of a risk assessment and impact analysis conducted by the NDIT Governance, Risk, and Compliance (GRC) Team and on Executive Leadership Team (ELT) approval.

- Users and agencies may also check with their Technology Business Partner (TBP) and Information Security Officer (ISO) if unsure about appropriate business use cases.

Questions on AI can also be sent to aiquestions@nd.gov.